Can Anybody Hear Me? Automated Testing for Multi-User Chat

Automating Testing for Multi-User Flows

Automating test cases is key to reducing the time to release new features, as well as preventing regressions in our code base. At Skillz, we’ve found great success in automating more and more of our test suites, enabling us to release new features with confidence and catch regressions with plenty of time to fix them before a release. Our user interface (UI) automation runs our internal test apps on emulators and simulators, logging in as test users and interacting with elements on the device screens. This allows us to test critical flows and new features, provided our engineers prepare them for automation.

However, for all its strengths, UI automation struggles with flows that involve multiple users. This normally isn’t a problem, as the Skillz experience is primarily asynchronous, but it is a real obstacle for our growing social feature, multi-user chat.

The Problem

Features like our chat service are often built upon WebSockets, which require a live, open connection for as long as they are in use. Unlike a one-off application programming interface (API) call, which can be easily automated, these live connections must stay open and handle incoming data that arrives without a request being made. Our chat service uses a custom XMPP setup with Skillz-specific extensions. This chat structure makes simulating multiple users difficult, as our software development kit (SDK) doesn’t support multiple users logged in at the same time, and our automation test suite doesn’t have access to the chat client logic that the SDK has.

So how do we automate chat features? Let’s take a look at the following test case:

“Given I am a user logged into Skillz, when I accept a new friend request I can receive messages from that user in real-time.”

One approach to this test case could be the following:

- Pre-generate two Skillz user accounts

- Log into one of the user accounts and connect to chat

- Send a friend request to the second user

- Switch to the second user account

- Open chat on the second user account

- Accept the request on the second user account

- Send a message to the first user

- Switch back to the first user

- Open chat and check the direct message

Even after all of these steps, we miss the core of the test case: that the message is received in real time. The first user is not in chat when the message is sent, so we only verify that it shows up later. This user-switching approach prevents us from automating tests against live users, and it adds unnecessary time to each test, which quickly becomes unsustainable. Our Appium-based testing framework doesn’t make it easy to run a test that involves multiple users, yet chat cannot be tested without them. The logical next step is to ask, “How can we better simulate this test?”

Let Them Eat Cake: The Headless Chat User

The solution to our problem is what we refer to as the “headless user” – a user connected to our chat service that we can use to send messages to the chat server alongside friend requests and more, without any of the UI logic. It solely knows about the actual client-server connection logic and can maintain our socket connection. It can handle incoming data, but doesn’t know the business logic for what to do with the data.

To create this headless user, we had to identify where the chat client we are using could be split into functionality vs. view. We separated out the logic that deals with opening and closing the websocket, as well as sending or receiving messages from the chat servers. Our headless user started off as a client that could open the connection, join rooms, send messages, and invite friends.
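To illustrate that split, here is a minimal Python sketch of a client that contains only connection and messaging logic. Everything in it is an assumption made for illustration: the class name `HeadlessChatClient`, its method names, and the JSON frames (the real service speaks XMPP with Skillz-specific extensions, which are not shown here). The transport is injected as a plain callable so the sketch runs without a server.

```python
import json
from typing import Callable, List


class HeadlessChatClient:
    """Hypothetical headless user: connection and messaging logic, no UI."""

    def __init__(self, user_id: str, send_frame: Callable[[str], None]) -> None:
        self.user_id = user_id
        # send_frame stands in for writing to an open websocket.
        self._send_frame = send_frame
        self.connected = False

    def connect(self) -> None:
        self._send({"type": "connect"})
        self.connected = True

    def join_room(self, room_id: str) -> None:
        self._send({"type": "join", "room": room_id})

    def send_message(self, to: str, body: str) -> None:
        self._send({"type": "message", "to": to, "body": body})

    def invite_friend(self, user_id: str) -> None:
        self._send({"type": "friend_request", "to": user_id})

    def _send(self, payload: dict) -> None:
        # Stamp every outgoing frame with the sender before serializing it.
        self._send_frame(json.dumps({"from": self.user_id, **payload}))


# Usage: capture outgoing frames in a list instead of a real socket.
sent_frames: List[str] = []
client = HeadlessChatClient("user-2", sent_frames.append)
client.connect()
client.invite_friend("user-1")
client.send_message("user-1", "hello")
```

Because the client never touches a view, the same object could be driven from a test script, which is the property the headless user relies on.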

With this headless user, the example test case above looks more like this:

- Pre-generate two Skillz user accounts

- Log into the first user account and connect to chat

- Create a headless user for the second client

- Send a friend request from the headless user to the first user

- Observe the friend request on the first user’s device and accept it

- Send the message from the headless user

- Observe the message appears in real time on the first user’s device

This cuts out both user switches and ensures we test the case properly: the first user stays logged in and receives the message in real time.
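The flow above can be sketched as a small self-contained Python test. This is only an illustration under stated assumptions: `InMemoryChatServer` is a hypothetical stand-in that delivers frames synchronously (approximating real-time delivery), `DeviceUnderTest` stands in for the app that UI automation would drive, and none of the names or frame shapes come from the actual Skillz module.

```python
from typing import Callable, Dict, List


class InMemoryChatServer:
    """Stand-in for the chat server: routes each frame to its recipient at once."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], None]] = {}

    def connect(self, user_id: str, on_frame: Callable[[dict], None]) -> None:
        self._handlers[user_id] = on_frame

    def deliver(self, frame: dict) -> None:
        self._handlers[frame["to"]](frame)


class ChatParticipant:
    """Shared connection logic: connect on creation, record incoming frames."""

    def __init__(self, user_id: str, server: InMemoryChatServer) -> None:
        self.user_id = user_id
        self._server = server
        self.received: List[dict] = []
        server.connect(user_id, self.received.append)

    def send(self, kind: str, to: str, body: str = "") -> None:
        self._server.deliver(
            {"type": kind, "from": self.user_id, "to": to, "body": body}
        )


class HeadlessUser(ChatParticipant):
    """Hypothetical headless user: talks to the server, has no UI."""


class DeviceUnderTest(ChatParticipant):
    """Stand-in for the app on the emulator; `received` models what the UI shows."""

    def accept_friend_request(self, from_user: str) -> None:
        pending = [f for f in self.received
                   if f["type"] == "friend_request" and f["from"] == from_user]
        if not pending:
            raise AssertionError(f"no friend request from {from_user}")
        self.send("friend_accepted", to=from_user)


def run_chat_flow() -> List[dict]:
    server = InMemoryChatServer()
    device = DeviceUnderTest("user-1", server)  # first user, logged in on device
    headless = HeadlessUser("user-2", server)   # second user, no device needed
    headless.send("friend_request", to="user-1")
    device.accept_friend_request("user-2")
    headless.send("message", to="user-1", body="hello")
    return device.received


frames_on_device = run_chat_flow()
```

The first user never logs out in this flow, so the final check runs against a session that was connected when the message was sent.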

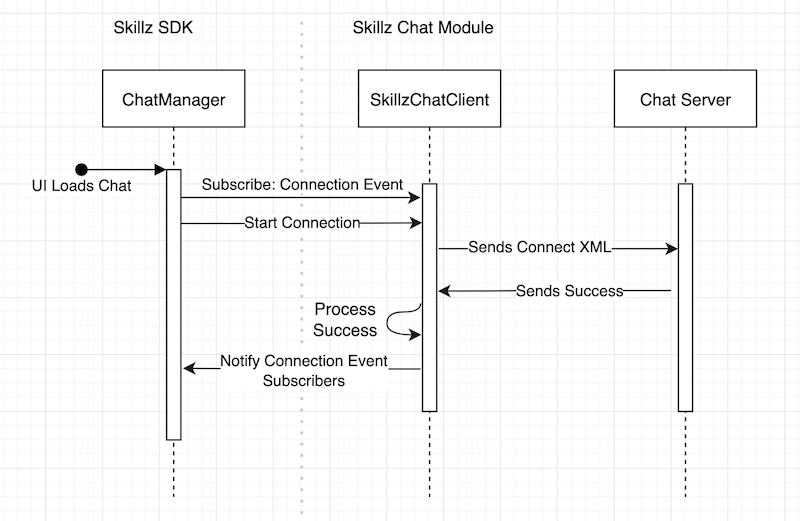

So how is this headless user made? When creating this, it was imperative that we could handle asynchronous events coming from the server, as most of the Skillz chat feature is reacting to incoming events. To solve this, we set up a list of events that can be listened to on the headless user. This includes events such as connection success, messages received, and more. An example of how this works is outlined below:
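As an illustration only, a minimal Python sketch of such an event-listener interface might look like the following. The class name, the event names `connection_success` and `message_received`, and the dictionary frames are all hypothetical; they are not the actual Skillz module API, and the sketch reports a successful connection immediately instead of opening a real websocket.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Listener = Callable[[Any], None]


class HeadlessChatUser:
    """Hypothetical headless user exposing a small event-listener interface."""

    def __init__(self, user_id: str) -> None:
        self.user_id = user_id
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def on(self, event: str, listener: Listener) -> None:
        """Register a callback for a named event."""
        self._listeners[event].append(listener)

    def _emit(self, event: str, payload: Any = None) -> None:
        # Copy the list so a listener may register others while we iterate.
        for listener in list(self._listeners.get(event, [])):
            listener(payload)

    def connect(self) -> None:
        # A real client would open the websocket and wait for the server's
        # acknowledgement; this sketch reports success immediately.
        self._emit("connection_success", self.user_id)

    def handle_incoming(self, frame: dict) -> None:
        """Translate a raw incoming frame into an event for listeners."""
        if frame.get("type") == "message":
            self._emit("message_received", frame)


# Usage: the caller only registers listeners and starts the connection.
events: List[str] = []
user = HeadlessChatUser("user-2")
user.on("connection_success", lambda uid: events.append(f"connected:{uid}"))
user.on("message_received", lambda frame: events.append(frame["body"]))
user.connect()
user.handle_incoming({"type": "message", "from": "user-1", "body": "hi"})
```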

In this case, someone using our library only needs to know that they should listen for connection events and tell the client to start the connection. The headless chat module is then responsible for formatting outgoing data and handling incoming data on the websocket. This headless chat module has already been put to use in our UI suite to build automation around our chat feature. This has allowed us to enable chat in more of the games on the Skillz platform with even greater confidence, as seen in Solitaire Cube, Blackout Bingo, Big Buck Hunter, Dominos Gold, Pool Payday, Cube Cube, Bubble Shooter Arena, Strike! By Bowlero, Diamond Strike, and Mixmaster Showdown.

Looking Forward

We have already begun building and using this new module to add chat to our internal testing frameworks, and have added the SDK features that were missing from our initial proof of concept. With a clearly defined API, this module makes it easier for Skillz to add chat to our other products.

With that in mind, we took a number of considerations into account when designing this module:

- Minimizing how much callers need to know about the inner workings of chat

- Defining a clean interface with simple method signatures

- Allowing for future feature development with an expandable event system

One other benefit of building the chat module in this manner is that we’re able to prepare our whole suite of applications that use it for changes to our chat infrastructure. If we were to update our XMPP extensions or even replace our existing chat servers with new technology, we could now make the change in one place. In theory, because every app goes through the same interfaces, the whole selection can be brought up to date by changing the module and updating the dependency in each product that uses it.
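A rough Python sketch of that idea, assuming a hypothetical `ChatBackend` interface: callers depend only on `ChatModule`, so swapping the wire format means swapping one object. The two backends and their frame formats are invented for illustration (the XML one only resembles an XMPP message stanza) and do not describe the real Skillz servers.

```python
import json
from typing import Protocol
from xml.sax.saxutils import escape, quoteattr


class ChatBackend(Protocol):
    """Hypothetical interface the rest of the module depends on."""

    def encode_message(self, to: str, body: str) -> str: ...


class XmppLikeBackend:
    """Encodes messages as a simplified, XMPP-style XML stanza."""

    def encode_message(self, to: str, body: str) -> str:
        return f"<message to={quoteattr(to)}><body>{escape(body)}</body></message>"


class JsonBackend:
    """Encodes messages as JSON, standing in for a replacement technology."""

    def encode_message(self, to: str, body: str) -> str:
        return json.dumps({"to": to, "body": body})


class ChatModule:
    """What the apps call; it never needs to know which backend is in use."""

    def __init__(self, backend: ChatBackend) -> None:
        self._backend = backend

    def send_message(self, to: str, body: str) -> str:
        # A real module would write this to the socket; the sketch returns it.
        return self._backend.encode_message(to, body)


# Usage: the calling code is identical; only the injected backend differs.
old_wire = ChatModule(XmppLikeBackend()).send_message("user-1", "hi & bye")
new_wire = ChatModule(JsonBackend()).send_message("user-1", "hi & bye")
```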

If working on projects such as these interests you, we encourage you to check out our open positions!